Introduction

In a 2016 joint statement with Facebook, Twitter, and Microsoft, Google pledged to tackle extremist content on its platform and take concrete, effective steps to limit the spread of terrorism-promoting propaganda.[1] The platforms all committed to flagging and deplatforming terrorist content through the use of a "Digital DNA" database of image hashes, effectively forming a united front that would see extremists removed from almost every major site on the internet.

Six years later, however, extremist content can still be found easily via Google's search engine, and in some cases is actively promoted by the company's algorithm. Using subject-specific search terms, a user can readily access neo-Nazi and white supremacist content, often from the first page of search results. Some of this content includes clear incitement to violence, and even manuals on making weapons and guidelines for extremist activism. Two such neo-Nazi sites can be found with a quick Google search.

Neo-Nazi Websites Easily Found On Google

Because these sites often appear on the first page of Google's search results for related keywords, they are exposed to curious users, whether those users stumble upon the sites by accident or search for them deliberately. Deplatforming the sites from Google Search, in line with the company's policies on hateful content, would significantly reduce their traffic, severely limiting both sites' reach and accessibility.

The redirect method, in which users searching for harmful or extremist content are directed to intervention resources or anti-hate content, can be used effectively to combat extremism and disrupt the pipelines to extremist content online. A key element of this method is deplatforming extremist content from Google Search, making it significantly harder for users to find the content they are looking for. This approach has been applied with great effect to an imageboard that is used extensively by extremists and that has hosted livestreams of terrorist attacks and terrorist manifestos in recent years.

The following report outlines the ease with which a user can access neo-Nazi websites via a Google search.

YOU MUST BE SUBSCRIBED TO THE MEMRI DOMESTIC TERRORISM THREAT MONITOR (DTTM) TO READ THE FULL REPORT. GOVERNMENT AND MEDIA CAN REQUEST A COPY BY WRITING TO DTTMSUBS@MEMRI.ORG WITH THE REPORT TITLE IN THE SUBJECT LINE. PLEASE INCLUDE FULL ORGANIZATIONAL DETAILS AND AN OFFICIAL EMAIL ADDRESS IN YOUR REQUEST. NOTE: WE ARE ABLE TO PROVIDE A COPY ONLY TO MEMBERS OF GOVERNMENT, LAW ENFORCEMENT, MEDIA, AND ACADEMIA, AND TO SUBSCRIBERS; IF YOU DO NOT MEET THESE CRITERIA PLEASE DO NOT REQUEST.

Google's Policy on Hateful Content

Google has a clear policy that prohibits "content that promotes or condones violence or that has the primary purpose of inciting hatred on the basis of race or ethnic origin, religion, disability, gender, sexual orientation or gender identity, age, nationality, or veteran status," as outlined in a 2014 post by then-Senior Manager for Public Policy Christine Chen on the company's Public Policy Blog.[2]

The policy on violence, hate, and harassment is also outlined in the company's specific content policies for Google Search.[3]

These policies also specifically prohibit "content that promotes terrorist or extremist acts, which includes recruitment, inciting violence, or the celebration of terrorist attacks." Both sites are in clear contravention of this policy.

Neo-Nazi Website Is Easily Found On Google Search

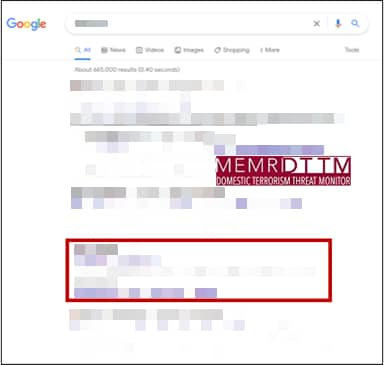

A neo-Nazi website can be easily found with a basic search on Google. Searching the site's name on the platform brings up about 665,000 results, the fourth of which is a direct link to the site's homepage.

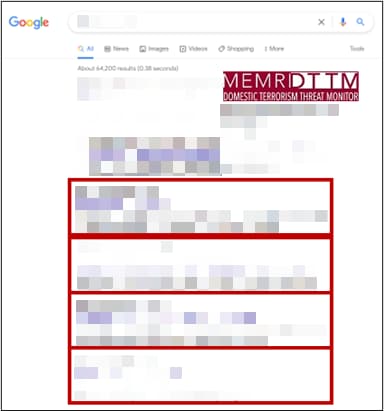

Searching for the site along with terms commonly used in the titles of videos hosted on the site brings up even more results.

[1] Theguardian.com/technology/2016/dec/05/facebook-twitter-google-microsoft-terrorist-extremist-content, December 5, 2016.

[2] Publicpolicy.googleblog.com/2014/09/fighting-online-hate-speech.html#:~:text=We%20don't%20allow%20content,%2C%20nationality%2C%20or%20veteran%20status, September 23, 2014, retrieved June 30, 2022.

[3] Support.google.com/websearch/answer/10622781?hl=en#zippy=%2Chateful-content, retrieved June 30, 2022.
